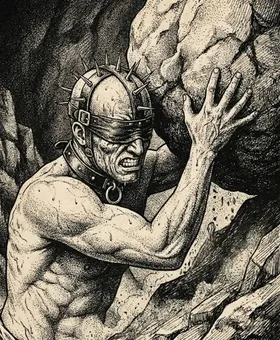

Visible incompetence as a legitimate state

Why I ordered water wrong and what it has to do with system design

This is the first post on this blog. It’s not a tutorial or a technical guide. It’s a real anecdote and an idea I have been working out for years.

A clarification before continuing: in engineering, “hope” is usually an anti-pattern. “Hope is not a strategy.” I’ve heard it dozens of times. This post proposes something different: not hope as a plan, but design that doesn’t depend on everything going well to keep functioning.

A minimal failure in a real system

My first international trip was made possible by a scholarship to attend KubeCon Europe. I was going to Amsterdam. I had always been fascinated by Holland. I was also going to see an old friend. Everything seemed to align.

Except me.

I hadn’t mastered English. Not then. Not completely now. Since university I had been thinking about something I now call Hope Oriented Programming, though back then it had no name. Not as a formal theory, but as a persistent intuition: many systems are designed for ideal humans, not real humans.

For two months I studied English with discipline. I convinced myself that preparation was enough.

The first failure occurred before landing.

On the plane, a flight attendant asked me what I wanted to drink. I answered nervously:

I would like a weiter.

I wanted water. She didn’t understand me. She asked me three times. I didn’t understand why she didn’t understand me… until I understood: I had pronounced water incorrectly.

It was a small embarrassment, but an unmistakable one.

The flight attendant understood, gave me water, and continued with her work. The system continued.

The state matters more than the error

That moment wasn’t important because of the mispronounced word, but because of the state I was in.

I had studied. I knew the word. But I failed in real time and in public.

It wasn’t total ignorance. It was visible incompetence.

That state is uncomfortable, frequent, and poorly tolerated. And yet, it’s the natural state of real learning.

A definition years in the making

Over time I understood that this scene summarized an idea I’ve been working on since university, in different contexts: study, work, teams, travel, systems. I’ve seen it repeat both while leading engineering teams at a startup and as a research assistant providing lab computing resources to students at every stage, from undergraduates to postdocs, each with their own ways of failing and learning.

After several years of thinking about it, I arrived at this definition:

Hope Oriented Programming doesn’t design systems expecting everything to go well; it designs systems that don’t collapse when things inevitably go wrong.

It’s not optimism. It’s not motivation. It’s design for continuity.

Systems that only tolerate competent humans

Many systems fail not because of technical errors, but because of incorrect assumptions.

I’ve seen CI/CD pipelines where a typo in a variable name blocks the deploy without a clear message. I’ve seen PRs where the reviewer calls out the error in a public comment before asking if there was context. I’ve seen onboarding processes that assume the new person “should already know” how the internal permissions system works.

The result is predictable: people fake confidence, ask privately, or give up.

A well-designed system doesn’t require human perfection to operate. It tolerates clumsy errors. It tolerates intermediate states. It tolerates friction.
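As a rough illustration of tolerating a clumsy error, here is a minimal Python sketch of a pre-deploy check that names the likely typo instead of failing silently. The variable names in `REQUIRED_VARS` and the function `check_deploy_vars` are hypothetical, invented for this example; they do not come from any real pipeline.

```python
import difflib

# Hypothetical variables a deploy step expects (assumption for this sketch).
REQUIRED_VARS = {"DATABASE_URL", "API_TOKEN", "DEPLOY_ENV"}


def check_deploy_vars(provided):
    """Return human-readable problems; an empty list means nothing is missing."""
    problems = []
    for name in sorted(REQUIRED_VARS - set(provided)):
        # Look for a provided name that is almost, but not quite, the expected one.
        guess = difflib.get_close_matches(name, list(provided), n=1, cutoff=0.8)
        if guess:
            problems.append(f"Missing {name}. Found {guess[0]}: is it a typo?")
        else:
            problems.append(f"Missing {name}. Set it in the pipeline settings.")
    return problems


if __name__ == "__main__":
    # A config with one misspelled variable name.
    for line in check_deploy_vars(
        {"DATABSE_URL": "x", "API_TOKEN": "t", "DEPLOY_ENV": "prod"}
    ):
        print(line)
```

The deploy is still blocked, but the person who made the typo gets a message that assumes a small slip rather than incompetence, and points at the fix.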

The flight attendant didn’t correct me. Didn’t expose me. Didn’t collapse. She adapted.

That wasn’t kindness. It was resilience.

When large systems fail

There’s something curious about large and critical systems: we don’t remember them for the time they spend working, but for the exact moment they fail.

Nobody writes about GitHub being available for months. But everyone remembers when GitHub went down.

Not because it’s fragile, but because it’s central.

That’s where this type of design becomes visible: not in perfect uptime, but in the failure. In how the failure is communicated. In what is learned from it. In how the system continues.

For roles like SRE this is evident: we design systems not because they won’t fail, but because they will.

Resilience isn’t demonstrated in stability. It’s revealed under stress.

Closing

Hope Oriented Programming doesn’t propose eliminating error or romanticizing it. It proposes integrating it without humiliation and without collapse.

Hope here is not an individual emotion. It’s an emergent property of the system.

This post is not a destination.

It’s the first visible failure of this blog: publishing before having everything figured out.

If the system works, there will be a post 1.